OceanBase MySQL Migration and Synchronization to Datahub

NineData data replication supports the efficient migration or real-time synchronization of OceanBase MySQL data to DataHub, ensuring seamless data integration and enabling data consolidation and analysis across multiple scenarios.

Prerequisites

- The source and target data sources have been added to NineData. For instructions on how to add data sources, see Add Data Source.

- The source database type is OceanBase MySQL.

- The target data source type is Datahub.

Usage Limitations

- Before synchronizing data, assess the performance of the source and target data sources, and run the synchronization during off-peak business hours if possible. Full data initialization consumes read and write resources on both the source and target data sources and increases database load.

- Ensure that every table in the synchronization objects has a primary key or unique constraint and that its column names are unique; otherwise, the same data may be synchronized more than once.
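Before creating a task, you may want to screen the tables you plan to synchronize against the two requirements above. The following is a minimal sketch that assumes you have already collected each table's column names and key definitions (for example, by querying the source database). The `TableMeta` type and `check_table` function are hypothetical, and treating column names that differ only in case as duplicates is a conservative assumption.

```python
from dataclasses import dataclass, field


@dataclass
class TableMeta:
    """Hypothetical summary of one source table's metadata."""
    name: str
    columns: list[str]
    primary_key: list[str] = field(default_factory=list)
    unique_keys: list[list[str]] = field(default_factory=list)  # one list of columns per unique constraint


def check_table(table: TableMeta) -> list[str]:
    """Return human-readable problems that would violate the usage limitations."""
    problems = []
    if not table.primary_key and not table.unique_keys:
        problems.append(f"{table.name}: no primary key or unique constraint")
    seen = set()
    for column in table.columns:
        key = column.lower()  # assumption: names differing only in case count as duplicates
        if key in seen:
            problems.append(f"{table.name}: duplicate column name {column!r}")
        seen.add(key)
    return problems


tables = [
    TableMeta("orders", ["id", "amount"], primary_key=["id"]),
    TableMeta("audit_log", ["event", "Event"]),
]
for t in tables:
    for problem in check_table(t):
        print(problem)
```

Here `orders` passes, while `audit_log` is reported twice: once for lacking a key and once for the case-only duplicate column.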

Operation Steps

NineData's data replication product is now commercially available. You can still use 10 replication tasks for free, subject to the following:

- The 10 free tasks can include 1 Incremental task, with the Micro specification.
- Tasks in the Terminated status do not count toward the 10-task limit. If you have already created 10 replication tasks and want to create more, terminate earlier replication tasks and then create new ones.
- When creating a replication task, you can select only the specifications (Spec) you have purchased. Unpurchased specifications are grayed out and cannot be selected. To purchase additional specifications, contact us through the customer service icon at the bottom right of the page.

Log in to the NineData Console.

In the left navigation bar, click Replication > Data Replication.

On the Replication page, click Create Replication in the top right corner.

On the Source & Target tab, configure the settings as shown below, then click Next.

| Parameter | Description |
|---|---|
| Name | Enter a name for the replication task. Use a meaningful name to make the task easier to find and manage. Maximum 64 characters. |
| Source | The data source that contains the objects to replicate. |
| Target | The data source that receives the replicated objects. Select the destination Datahub data source. |
| Datahub Project | Select the target Datahub project. Data from the source is written to this project. |
| Type | Select the content to replicate to the target data source. Schema: replicates only the schema (database and table structure), not the data. Full: replicates all objects and data from the source (full data replication); the switch on the right enables periodic full replication (see Periodic Full Replication). Incremental: after full replication, replicates incremental changes based on the source's logs; use the icon next to this option to configure which operation types are replicated, and uncheck any operation type you want to exclude. |
| Spec | Available only when Type includes Full or Incremental; not available for Schema-only tasks. The specification determines the replication speed: the larger the specification, the higher the replication rate. Hover over the icon next to it to view the replication rate and configuration of each specification. Each specification shows its available quantity and total quantity; a specification whose available quantity is 0 is grayed out and cannot be selected. |
| Incremental Started | Required only when Type includes Incremental. From Started: uses the task start time as the starting point for incremental replication. Customized Time: select a custom start time for incremental replication; you can also specify the time zone for your region. If the custom start time is earlier than the task start time and DDL operations occurred in between, the task may fail. |
| If target table already exists | Pre-Check Error and Stop Task: if the target table contains data during the pre-check, the task stops. Ignore the existing data and append: if data exists, conflicting data is skipped and the rest is appended. |
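For the Customized Time option of Incremental Started, the time zone determines which instant a wall-clock time refers to: 08:00 at UTC+8 is 00:00 UTC. The following minimal sketch converts a custom start time to an absolute instant and flags when it is earlier than the task start, which is the case where DDL operations in between may cause the task to fail. It uses fixed UTC offsets rather than named time zones, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def parse_start_time(text: str, utc_offset_hours: float) -> datetime:
    """Interpret 'YYYY-MM-DD HH:MM:SS' as wall-clock time at a fixed UTC offset."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    return datetime.strptime(text, "%Y-%m-%d %H:%M:%S").replace(tzinfo=tz)


def is_earlier_than_task_start(custom_start: datetime, task_start: datetime) -> bool:
    """Compare two timezone-aware datetimes by the absolute instant they denote."""
    return custom_start < task_start


custom = parse_start_time("2024-05-01 08:00:00", 8)  # 08:00 at UTC+8
task = parse_start_time("2024-05-01 01:00:00", 0)    # 01:00 UTC

print(custom.astimezone(timezone.utc).isoformat())  # 2024-05-01T00:00:00+00:00
print(is_earlier_than_task_start(custom, task))     # True
```

Although 08:00 looks later than 01:00, the custom start is one hour before the task start once both are expressed in UTC, so any DDL executed during that hour falls inside the replayed window.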

On the Objects tab, configure the parameters below, then click Next.

| Parameter | Description |
|---|---|
| Replication Objects | Select the content to replicate. Choose All Objects to replicate everything in the source database, or choose Customized Object, select specific objects in the Source Object list, and click > to add them to the Target Object list. |

If you need to create multiple replication tasks with the same objects, you can create a config file and import it when creating each new task. Click Import Config in the top right, then click Download Template to download the config file template. After editing the file, click Upload to import the configuration in bulk. The config file contains the following parameters:

| Parameter | Description |
|---|---|
| source_table_name | Name of the source table that contains the object to replicate. |
| destination_table_name | Name of the target table that receives the object. |
| source_schema_name | Schema name of the source object. |
| destination_schema_name | Schema name of the target object. |
| source_database_name | Database name of the source object. |
| target_database_name | Database name of the target object. |
| column_list | List of columns to replicate. |
| extra_configuration | Additional configuration, such as column mapping rules and row filter conditions. |

Example of extra_configuration:

```json
{
  "extra_config": {
    "column_rules": [
      {
        "column_name": "created_time",
        "destination_column_name": "migrated_time",
        "column_value": "current_timestamp()"
      }
    ],
    "filter_condition": "id != 0"
  }
}
```

In this example:

- column_name: the original column to map (created_time).
- destination_column_name: the target column name it is mapped to (migrated_time).
- column_value: sets the column value to the current timestamp.
- filter_condition: only rows whose id is not 0 are synchronized.

Refer to the downloaded template for a complete configuration file example.
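Because strict JSON has no comment syntax, generating the extra_configuration value programmatically is one way to avoid syntax errors when preparing many entries. The following minimal sketch assumes extra_configuration holds a JSON object with the shape of the example above; the helper `build_extra_configuration` is hypothetical, and the downloaded template remains the authority on the overall file layout.

```python
import json


def build_extra_configuration(column_rules, filter_condition=None):
    """Build an extra_configuration object shaped like the example (hypothetical helper)."""
    extra = {"column_rules": list(column_rules)}
    if filter_condition is not None:
        extra["filter_condition"] = filter_condition
    return {"extra_config": extra}


config = build_extra_configuration(
    column_rules=[
        {
            "column_name": "created_time",               # source column to map
            "destination_column_name": "migrated_time",  # target column name
            "column_value": "current_timestamp()",       # value written to the target column
        }
    ],
    filter_condition="id != 0",  # synchronize only rows whose id is not 0
)

# json.dumps emits strict JSON: double-quoted keys and strings, no comments.
print(json.dumps(config, indent=2))
```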

On the Mapping tab, you can configure each table individually: click Column selection and processing configuration next to a target table to configure its columns.

If the source or target metadata has changed, click Refresh Metadata in the top right. When the configuration is complete, click Save and Pre-Check.

In Column selection and processing configuration, you can also configure System Field Configuration. Because Datahub is a queue-based system that does not support in-place UPDATE or DELETE operations, NineData adds the following metadata fields to every record to identify it:

| Metadata Field | Value |
|---|---|
| _record_id_ | ${nd_record_id} |
| _operation_type_ | ${nd_operation_type} |
| _execution_time_ | ${nd_exec_timestamp} |
| _before_image_ | ${nd_before_image} |
| _after_image_ | ${nd_after_image} |

You can also customize the metadata field names or values as needed. For details, see Appendix 3: System Parameter Description.
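The metadata field values above are ${...} placeholders that are resolved per record from the system parameters described in Appendix 3. As a rough illustration of how a customized value might resolve, the sketch below uses Python's `string.Template`, which happens to use the same ${name} syntax. The substitution mechanism, the custom field `_source_table_`, and all sample parameter values are hypothetical; NineData's actual rendering may differ.

```python
from string import Template

# Default metadata fields and their value templates, as listed above.
DEFAULT_FIELDS = {
    "_record_id_": "${nd_record_id}",
    "_operation_type_": "${nd_operation_type}",
    "_execution_time_": "${nd_exec_timestamp}",
    "_before_image_": "${nd_before_image}",
    "_after_image_": "${nd_after_image}",
}


def render_fields(templates: dict[str, str], params: dict[str, str]) -> dict[str, str]:
    """Resolve each field's value template against one record's parameters.

    Template.substitute raises KeyError if a template names a parameter
    that is missing from params, which surfaces typos early.
    """
    return {name: Template(tpl).substitute(params) for name, tpl in templates.items()}


# Hypothetical customization: keep the defaults and add a field recording
# where each row came from, combining two parameters from Appendix 3.
custom_fields = {**DEFAULT_FIELDS, "_source_table_": "${nd_database_name}.${nd_table_name}"}

# Hypothetical values for one record; the real encodings of the operation
# type and image flags are not specified here, so placeholders are used.
record_params = {
    "nd_record_id": "1001",
    "nd_operation_type": "<operation type>",
    "nd_exec_timestamp": "2024-05-01 08:00:00",
    "nd_before_image": "<before-image flag>",
    "nd_after_image": "<after-image flag>",
    "nd_database_name": "shop",
    "nd_table_name": "orders",
}

print(render_fields(custom_fields, record_params)["_source_table_"])  # shop.orders
```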

On the Pre-check tab, wait for the system to complete the pre-check. Once it passes, click Launch.

Optionally, select Enable data consistency comparison to start a data consistency comparison automatically after replication. When the comparison starts depends on the selected Type:

- Schema: starts after schema replication completes.
- Schema + Full, or Full: starts after full replication completes.
- Schema + Full + Incremental, or Incremental: starts once the incremental data has caught up with the source and Delay is 0 seconds. You can click View Details to check the sync delay on the Details page.

If the pre-check fails, click Details in the Actions column to troubleshoot. After fixing the issue, click Check Again, and repeat until the pre-check passes.

If the Result column shows Warning, you can fix or ignore the item depending on your situation.

On the Launch page, once you see Launch Successfully, the replication task has started. You can:

- Click View Details to view the task’s execution details.

- Click Back to list to return to the Replication task list page.

View Synchronization Results

Log in to the NineData Console.

In the left navigation bar, click Replication > Data Replication.

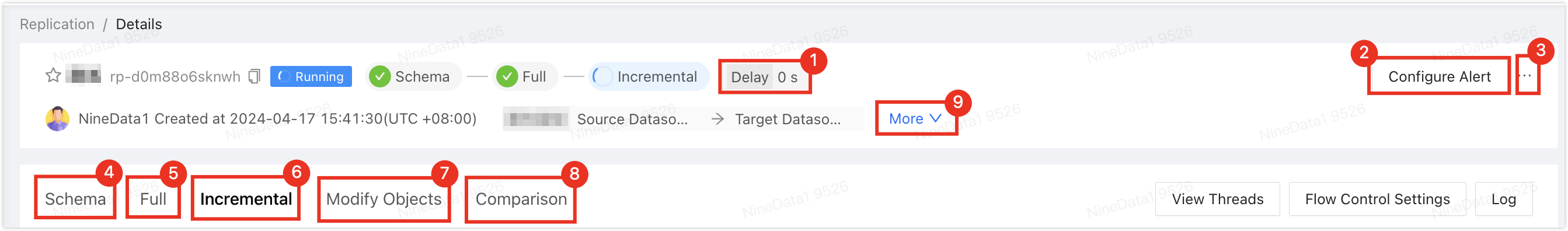

On the Replication page, click the Task ID of the target synchronization task. The task details page contains the following items.

1. Sync Delay: The data synchronization delay between the source and target data sources. A delay of 0 seconds means the two ends are in sync, at which point you can switch your business to the target data source to complete a smooth migration.
2. Configure Alerts: After you configure alerts, the system notifies you by your chosen method when the task fails. For more information, see Operations Monitoring Introduction.
3. More:
   - Pause: Pause the task. Only tasks in the Running status can be paused.
   - Duplicate: Create a new replication task with the same configuration as the current task.
   - Terminate: End a task that is unfinished or listening (that is, in incremental synchronization). A terminated task cannot be restarted, so proceed with caution. If the synchronization objects contain triggers, a trigger replication option appears; select as needed.
   - Delete: Delete the task. A deleted task cannot be recovered, so proceed with caution.
4. Structure Replication (displayed when the task includes structure replication): Displays the progress and details of structure replication.
   - Click Log on the right side of the page to view the execution logs of structure replication.
   - Click the icon on the right side of the page to view the latest information.
   - Click View DDL in the Actions column of a target object in the list to view the SQL playback.
5. Full Replication (displayed when the task includes full replication): Displays the progress and details of full replication.
   - Click Monitor on the right side of the page to view monitoring metrics during full replication. During full replication, you can also click Flow Control Settings on the right side of the monitoring page to limit the rate of writes to the target data source, in rows/second.
   - Click Log on the right side of the page to view the execution logs of full replication.
   - Click the icon on the right side of the page to view the latest information.
6. Incremental Replication (displayed when the task includes incremental replication): Displays monitoring metrics of incremental replication.
   - Click View Threads on the right side of the page to view the operations the task is currently executing, including:
     - Thread ID: The ID of a thread in progress. Replication tasks run in multiple threads.
     - Execute SQL: The SQL statement the thread is currently executing.
     - Response Time: The response time of the thread. If this value keeps increasing, the thread may be stuck.
     - Event Time: The time at which the thread started.
     - Status: The status of the thread.
   - Click Flow Control Settings on the right side of the page to limit the rate of writes to the target data source, in rows/second.
   - Click Log on the right side of the page to view the execution logs of incremental replication.
   - Click the icon on the right side of the page to view the latest information.
7. Modify Object: Displays the modification records of the synchronization objects.
   - Click Modify Objects on the right side of the page to configure the synchronization objects.
   - Click the icon on the right side of the page to view the latest information.
8. Data Comparison: Displays the comparison results between the source and target data sources. If you have not enabled data comparison, click Enable Comparison on the page.
   - Click Re-compare on the right side of the page to start a new comparison between the current source and target data sources.
   - Click Stop on the right side of the page to stop a running comparison task immediately.
   - Click Log on the right side of the page to view the execution logs of the consistency comparison.
   - Click Monitor (displayed only for data comparison) to view the trend chart of comparison RPS (records per second). Click Details to view earlier records.
   - Click the icon in the Actions column of the comparison list (displayed on the Data tab only when inconsistencies exist) to view the comparison details between the source and target.
   - Click the icon in the Actions column of the comparison list (displayed only when inconsistencies exist) to generate change SQL. You can copy this SQL and execute it on the target data source to correct the inconsistent data.
9. Expand: Displays detailed information about the current replication task. Common options:
   - Export table configuration: Export the current task's database and table configuration so that you can import it when creating a new replication task. This helps you quickly set up multiple replication links with the same replication objects.
   - Alert Rules: Configure the alert policy for the current task.

Appendix 1: Data Type Mapping Table

| Type | OceanBase MySQL Data Type | Datahub Default Mapping Data Type |
|---|---|---|
| Numeric Types | BOOL, BOOLEAN | BOOLEAN |
| | TINYINT | TINYINT |
| | SMALLINT | SMALLINT |
| | MEDIUMINT | MEDIUMINT |
| | INT | INT |
| | BIGINT | BIGINT |
| | DECIMAL, DOUBLE | DECIMAL |
| | FLOAT | FLOAT |
| | DOUBLE | DOUBLE |
| | BIT | STRING |
| Date Types | DATETIME (without time zone), TIMESTAMP (with time zone) | TIMESTAMP |
| | DATE | STRING |
| | TIME | STRING |
| | YEAR | INT |
| Character Types | CHAR (up to 256), VARCHAR (up to 262144), BINARY (up to 256), VARBINARY (up to 1048576), ENUM, SET | STRING |
| Large Objects | TINYBLOB (up to 255), BLOB (up to 65535), MEDIUMBLOB (up to 16777215), LONGBLOB (up to 536870910), TINYTEXT (up to 255), TEXT (up to 65535), MEDIUMTEXT (up to 16777215), LONGTEXT (up to 536870910) | STRING |
| JSON Type | JSON | STRING |
| Spatial Types | GEOMETRY, POINT, LINESTRING, POLYGON, MULTIPOINT, MULTILINESTRING, MULTIPOLYGON, GEOMETRYCOLLECTION | STRING |
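If you want to check ahead of time which Datahub type each source column will receive, the default mapping above can be transcribed into a lookup table. The following is a hypothetical helper, not part of NineData: it extracts the base type name from a column definition such as `varchar(64)` or `bigint unsigned` and looks it up. It covers only the default mapping, and where DOUBLE appears in two rows, it follows the dedicated DOUBLE row.

```python
import re

# Default OceanBase MySQL -> Datahub mappings transcribed from the table above.
DEFAULT_TYPE_MAPPING = {
    "BOOL": "BOOLEAN", "BOOLEAN": "BOOLEAN",
    "TINYINT": "TINYINT", "SMALLINT": "SMALLINT", "MEDIUMINT": "MEDIUMINT",
    "INT": "INT", "BIGINT": "BIGINT",
    "DECIMAL": "DECIMAL", "FLOAT": "FLOAT", "DOUBLE": "DOUBLE",
    "BIT": "STRING",
    "DATETIME": "TIMESTAMP", "TIMESTAMP": "TIMESTAMP",
    "DATE": "STRING", "TIME": "STRING", "YEAR": "INT",
    "JSON": "STRING",
}

# Character, large-object and spatial types all map to STRING.
_STRING_TYPES = (
    "CHAR", "VARCHAR", "BINARY", "VARBINARY", "ENUM", "SET",
    "TINYBLOB", "BLOB", "MEDIUMBLOB", "LONGBLOB",
    "TINYTEXT", "TEXT", "MEDIUMTEXT", "LONGTEXT",
    "GEOMETRY", "POINT", "LINESTRING", "POLYGON", "MULTIPOINT",
    "MULTILINESTRING", "MULTIPOLYGON", "GEOMETRYCOLLECTION",
)
DEFAULT_TYPE_MAPPING.update({t: "STRING" for t in _STRING_TYPES})

# Leading identifier of a column definition, e.g. "varchar" in "varchar(64)".
_BASE_TYPE = re.compile(r"\s*([A-Za-z]+)")


def datahub_type(column_type: str) -> str:
    """Return the default Datahub type for a source column definition."""
    match = _BASE_TYPE.match(column_type)
    if match is None:
        raise ValueError(f"cannot parse column type {column_type!r}")
    base = match.group(1).upper()
    if base not in DEFAULT_TYPE_MAPPING:
        raise ValueError(f"no default Datahub mapping for {column_type!r}")
    return DEFAULT_TYPE_MAPPING[base]


print(datahub_type("varchar(64)"))      # STRING
print(datahub_type("bigint unsigned"))  # BIGINT
print(datahub_type("datetime(6)"))      # TIMESTAMP
```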

Appendix 2: Pre-Check Item List

| Check Item | Check Content |
|---|---|
| Source Data Source Check | Check the gateway status of the source data source, instance reachability, and accuracy of username and password |
| Target Data Source Check | Check the gateway status of the target data source, instance reachability, and accuracy of username and password |
| Source Database Permission Check | Check if the account permissions of the source database meet the requirements |
| Target Database Data Existence Check | Check if there is existing data for the objects to be replicated in the target database |

Appendix 3: System Parameter Description

To store incremental data in DataHub, NineData provides a set of default system parameters and metadata fields that identify the characteristics of each record. The following table describes the meaning and usage of each system parameter.

| Parameter Name | Meaning and Usage |
|---|---|
| ${nd_record_id} | The unique ID of each data record (Record). In UPDATE operations, the record_id remains the same before and after the update so that the two records can be associated. |
| ${nd_exec_timestamp} | The execution time of the Record operation. |
| ${nd_database_name} | The name of the database to which the table belongs, used to distinguish data sources. |
| ${nd_table_name} | The name of the table corresponding to the Record, used to locate change records precisely. |
| ${nd_operation_type} | The type of change operation of the Record. |
| ${nd_before_image} | The before-image identifier, indicating that the Record reflects the state before the change occurred, that is, the data has since been changed. |
| ${nd_after_image} | The after-image identifier, indicating that the Record reflects the state after the change occurred, that is, the data is in its latest state. |
| ${nd_datasource} | Data source information: the IP address and port of the data source, in the format ip:port. |
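Because ${nd_record_id} stays the same across an UPDATE's before and after images, a downstream consumer can join the two halves of each update. The following minimal sketch assumes the Datahub records have already been decoded into a small hypothetical `ChangeRecord` type. The operation-type string "UPDATE" and the boolean image flag are assumptions, since the exact value encodings of ${nd_operation_type}, ${nd_before_image}, and ${nd_after_image} are not listed here.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ChangeRecord:
    """Hypothetical decoded Datahub record carrying NineData's metadata fields."""
    record_id: str         # value of _record_id_ (${nd_record_id})
    operation_type: str    # value of _operation_type_; "UPDATE" is an assumed encoding
    is_before_image: bool  # assumed decoding of _before_image_ / _after_image_
    data: dict = field(default_factory=dict)


def pair_updates(records):
    """Return {record_id: (before_row, after_row)} for UPDATE records.

    A side that has not arrived yet is None, so callers can detect
    incomplete pairs.
    """
    sides = defaultdict(dict)
    for rec in records:
        if rec.operation_type != "UPDATE":
            continue  # only UPDATE records are paired in this sketch
        key = "before" if rec.is_before_image else "after"
        sides[rec.record_id][key] = rec.data
    return {rid: (s.get("before"), s.get("after")) for rid, s in sides.items()}


records = [
    ChangeRecord("r1", "UPDATE", True, {"id": 1, "status": "new"}),
    ChangeRecord("r1", "UPDATE", False, {"id": 1, "status": "paid"}),
    ChangeRecord("r2", "INSERT", False, {"id": 2, "status": "new"}),
]
print(pair_updates(records))
# {'r1': ({'id': 1, 'status': 'new'}, {'id': 1, 'status': 'paid'})}
```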