MySQL to Kafka

NineData data replication supports replicating data from MySQL to Kafka, enabling real-time data flow and distribution.

Background Information

Kafka is a distributed message queue with features such as high throughput, low latency, high reliability, and scalability, making it suitable for real-time data processing and distribution scenarios. Therefore, replicating data from MySQL to Kafka can help build a real-time data stream processing system to meet the needs of real-time data processing, data analysis, data mining, business monitoring, and more. In addition, replicating data from MySQL to Kafka can also decouple data, reducing dependencies and couplings between systems and making them easier to maintain and scale.

After data is replicated from MySQL to Kafka, it is stored in JSON format. For details about the fields in each JSON message, see the data format specification in the Appendix.

Prerequisites

The source and target data sources have been added to NineData. For instructions on how to add data sources, please refer to Creating a Data Source.

The source database is MySQL or a MySQL-compatible database, such as MariaDB, PolarDB MySQL, TDSQL-C, or GaussDB MySQL.

The target database is Kafka version 0.10 or above.

The source data source must have binlog enabled, with the following binlog parameters set:

- binlog_format=ROW

- binlog_row_image=FULL

Tip: If the source data source is a backup database, the log_slave_updates parameter must also be enabled to ensure that the complete binlog is captured.
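
The binlog prerequisites above can be checked before creating a task. The following is a minimal sketch, not part of NineData: the function name `find_binlog_problems` is hypothetical, and it assumes the system variables have already been read into a dictionary (for example from the rows returned by `SHOW GLOBAL VARIABLES`), with `log_bin` used as the indicator that binlog is enabled.

```python
# Required binlog settings, taken from the prerequisites above.
# log_bin=ON is assumed here to be the indicator that binlog is enabled.
REQUIRED_BINLOG_SETTINGS = {
    "log_bin": "ON",
    "binlog_format": "ROW",
    "binlog_row_image": "FULL",
}


def find_binlog_problems(variables, is_backup=False):
    """Return a list of readable problems; an empty list means all checks pass.

    `variables` is a dict of MySQL system variables (name -> value).
    `is_backup` marks the source as a backup database, which additionally
    requires log_slave_updates to be enabled.
    """
    required = dict(REQUIRED_BINLOG_SETTINGS)
    if is_backup:
        required["log_slave_updates"] = "ON"

    problems = []
    for name, expected in required.items():
        # Compare case-insensitively, since values may be reported in any case.
        actual = str(variables.get(name, "<missing>")).upper()
        if actual != expected:
            problems.append(f"{name} is {actual}, expected {expected}")
    return problems
```

An empty result means the settings match the prerequisites; each entry otherwise names the variable to fix.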

Restrictions

- Data replication applies only to the user databases in the data source. System databases are not replicated. For example, the information_schema, mysql, performance_schema, and sys databases in MySQL data sources are not replicated.

- Before performing data synchronization, evaluate the performance of the source and target data sources. Running synchronization during off-peak hours is recommended, because full data initialization consumes read and write resources on both data sources and increases database load.

- Ensure that each table in the synchronization object has a primary key or unique constraint and that column names are unique. Otherwise, the same data may be synchronized repeatedly.

Operation steps

NineData’s data replication product has been commercialized. You can still use 10 replication tasks for free, with the following considerations:

Among the 10 replication tasks, you can include 1 Incremental task, with a specification of Micro.

Tasks with a status of Terminated do not count towards the 10-task limit. If you have already created 10 replication tasks and want to create more, you can terminate previous replication tasks and then create new ones.

When creating replication tasks, you can only select the Spec you have purchased. Specifications that have not been purchased will be grayed out and cannot be selected. If you need to purchase additional specifications, please contact us through the customer service icon at the bottom right of the page.

Log in to the NineData Console.

Click on Replication > Data Replication in the left navigation bar.

On the Replication page, click Create Replication in the upper right corner.

On the Source & Target tab, configure the parameters according to the table below, and click Next.

| Parameter | Description |
|---|---|
| Name | Enter a meaningful name for the data synchronization task to make it easier to find and manage later. Up to 64 characters are supported. |
| Source | Select the MySQL data source that contains the data to be replicated. |
| Target | Select the target Kafka data source that will receive the replicated data. |
| Kafka Topic | Select the target Kafka Topic to which the data from MySQL will be written. |
| Delivery Partition | When delivering data to a Topic, you can specify which partition the data is delivered to.<br>- Deliver All to Partition 0: Deliver all data to the default partition 0.<br>- Deliver to different partition by the Hash value of [databaseName + tableName]: Distribute data across partitions by hash. The system uses the hash value of the database name and table name to determine the target partition, so data from the same table is always delivered to the same partition. |
| Type | Two replication types are supported: Full and Incremental.<br>- Full: Copy all objects and data from the source data source, i.e. full data replication.<br>- Incremental: After the full replication is completed, perform incremental replication based on the logs of the source data source. Click the icon to uncheck certain operation types according to your needs. Unchecked operations are ignored during incremental synchronization. |
| Incremental Started | Only required when Type is Incremental.<br>- From Started: Perform incremental replication based on the start time of the current replication task.<br>- Customized Time: Select the point in time at which incremental replication begins. You can select the time zone that matches your business location. If this point in time is earlier than the start of the current replication task, the task will fail if any DDL operations occurred in that period. |
| Target Table Preparation | Target Table Exists Data:<br>- Pre-Check Error and Stop Task: Stop the task when data is detected in the target table during the pre-check phase.<br>- Ignore the existing data and append: Ignore the conflicting data detected in the target table during the pre-check phase and append other data to it.<br>- Clear the existing data before write: Delete the conflicting data detected in the target table during the pre-check phase and rewrite it. |
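
The hash-based delivery option described above can be pictured with a short sketch. This is an illustration only: the source does not state which hash function NineData uses, so CRC32 and the function name `choose_partition` are assumptions. What it demonstrates is the property described in the text, namely that the same database and table name always map to the same partition.

```python
import zlib


def choose_partition(database_name, table_name, partition_count):
    """Map a table to a partition index in the range [0, partition_count)."""
    if partition_count < 1:
        raise ValueError("partition_count must be at least 1")
    # The key is databaseName + tableName, as in the option's name.
    key = f"{database_name}{table_name}".encode("utf-8")
    # CRC32 is an illustrative choice (an assumption, not NineData's hash).
    # It is deterministic across processes, unlike Python's built-in hash()
    # for strings.
    return zlib.crc32(key) % partition_count
```

Because all changes for one table land in one partition, a consumer reading that partition sees them in the order they were delivered.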

On the Objects tab, configure the following parameters, and then click Next.

| Parameter | Description |
|---|---|
| Replication Objects | Select the content to be copied. You can choose Full Instance to copy all content from the source database, or choose Customized Object, select the content to be copied in the Source Object list, and click > to add it to the Target Object list on the right. |
| Blacklist (optional) | Click Add to add a blacklist record, then select the database or object to add to the blacklist. Blacklisted content is not copied. Use this to exclude certain databases or objects when replicating with Customized Object or Full Instance.<br>- Left drop-down box: Select the name of the database to be added to the blacklist.<br>- Right drop-down box: Select the objects in the corresponding database. Multiple objects can be selected. Leave it blank to add the entire database to the blacklist. |

On the Mapping tab, you can configure each column that needs to be copied to Kafka separately. By default, all columns of the selected table will be copied.

On the Pre-check tab, wait for the system to complete the pre-check. After the pre-check passes, click Launch.

Tip:

- If the pre-check fails, click Details in the Actions column to the right of the target check item, troubleshoot the cause of the failure, fix it manually, and then click Check Again to rerun the pre-check until it passes.

- Check items with a Warning status in Result can be repaired or ignored, depending on the circumstances.

On the Launch page, when prompted with Launch Successfully, the synchronization task begins to run. At this point, you can perform the following operations:

- Click View Details to view the execution status of each stage of the synchronization task.

- Click Back to list to return to the Replication task list page.

View Synchronization Results

Log in to the NineData Console.

Click on Replication > Data Replication in the left navigation bar.

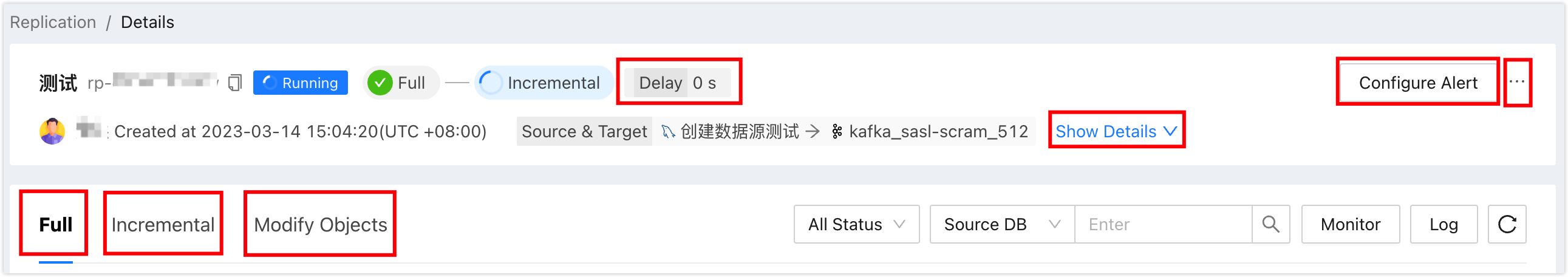

On the Replication page, click on the ID of the target synchronization task to open the Details page, which is explained as follows:

| Number | Function | Explanation |
|---|---|---|
| 1 | Synchronization Delay | The data synchronization delay between the source and target data sources. 0 seconds means that there is no delay between the two ends, that is, the data on the Kafka side has caught up with the MySQL side. |
| 2 | Configuration Alert | After an alert is configured, the system notifies you through the method you chose when the task fails. For more information, see Introduction to Operation and Maintenance Monitoring. |
| 3 | More | - Pause: Pause the task. Available only for tasks in Running status.<br>- Terminate: End a task that is unfinished or listening (i.e., in incremental synchronization). A terminated task cannot be restarted, so proceed with caution. If the synchronization object contains triggers, a trigger copying option pops up. Select as needed.<br>- Delete: Delete the task. A deleted task cannot be restored, so proceed with caution. |
| 4 | Full Copy | (Displayed in the case of full copy) Displays the progress and details of the full copy.<br>- Click Monitor on the right side of the page to view the monitoring indicators during full copy. During full copy, you can also click Flow Control Settings on the right side of the monitoring indicator page to limit the rate of writing to the target data source. The unit is MB/s.<br>- Click Log on the right side of the page to view the execution log of the full copy.<br>- Click the icon on the right side of the page to view the latest information. |
| 5 | Incremental Copy | (Displayed in the case of incremental copy) Displays the monitoring indicators of incremental synchronization.<br>- Click Flow Control Settings on the right side of the page to limit the rate of writing to the target data source. The unit is rows/second.<br>- Click Log on the right side of the page to view the execution log of incremental replication.<br>- Click the icon on the right side of the page to view the latest information. |
| 6 | Modify Object | Displays the modification records of the synchronized object.<br>- Click Modify Objects on the right side of the page to configure the synchronized object.<br>- Click the icon on the right side of the page to view the latest information. |
| 7 | Expand | Displays detailed information about the current replication task, including Type, Replication Objects, Started, etc. |

Appendix 1: Data Format Specification

Data replicated from MySQL to Kafka is stored in JSON format. The system divides the MySQL data evenly into multiple JSON objects, each of which represents one message.

- During the full copy phase, the number of MySQL data rows stored in a single message is determined by the `message.max.bytes` parameter. `message.max.bytes` is the maximum message size allowed in the Kafka cluster, with a default value of approximately 1 MB (the exact default depends on the Kafka version). You can adjust this value by modifying the `message.max.bytes` parameter in the Kafka configuration file, allowing each message to store more MySQL data rows. Note that setting this value too high may degrade Kafka cluster performance, because Kafka needs to allocate memory for each message.

- During the incremental copy phase, a single message stores one row of MySQL data.
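
To make the relationship between row count and message size concrete, here is a minimal sketch of size-bounded batching. It is not NineData's actual algorithm, which the source does not describe. The function name `pack_rows` is hypothetical, and the sketch ignores the extra envelope fields that each real message carries.

```python
import json


def pack_rows(rows, max_message_bytes):
    """Group rows into JSON-array messages no larger than max_message_bytes.

    A single row that is larger than the limit on its own is still emitted
    alone, as an oversized message; a real producer would have to reject or
    handle that case.
    """
    messages = []
    batch = []
    for row in rows:
        candidate = batch + [row]
        # Re-serializing the whole candidate is simple but quadratic;
        # acceptable for a sketch, not for production batching.
        size = len(json.dumps(candidate).encode("utf-8"))
        if size > max_message_bytes and batch:
            # The row does not fit: close the current batch and start a new one.
            messages.append(json.dumps(batch))
            batch = [row]
        else:
            batch = candidate
    if batch:
        messages.append(json.dumps(batch))
    return messages
```

Raising the size limit lets each batch hold more rows, which is the effect the text describes for a larger `message.max.bytes`.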

Each JSON object contains the following fields:

| Field Name | Field Type | Field Description | Field Example |

|---|---|---|---|

| serverId | STRING | The data source information to which the message belongs, in the format: <connection_address:port>. | "serverId":"47.98.224.21:3307" |

| id | LONG | The record ID of the message. This field increments globally and can be used to detect duplicate message consumption. | "id":156 |

| es | INT | Has a different meaning depending on the task stage. | "es":1668650385 |

| ts | INT | The time when the data was delivered to Kafka, represented as a Unix timestamp. | "ts":1668651053 |

| isDdl | BOOLEAN | Whether the data is a DDL operation (true or false). | "isDdl":true |

| type | STRING | The type of data. | "type":"INIT" |

| database | STRING | The database to which the data belongs. | "database":"database_name" |

| table | STRING | The table to which the data belongs. If the object corresponding to the DDL statement is not a table, the field value is null. | "table":"table_name" |

| mysqlType | JSON | The data types of the columns in MySQL, represented as a JSON object that maps each column name to its MySQL type. | "mysqlType": {"id": "bigint(20)", "shipping_type": "varchar(50)" } |

| sqlType | JSON | Reserved field. It can be ignored. | "sqlType":null |

| pkNames | ARRAY | The primary key names corresponding to the record in the binlog. | "pkNames": ["id", "uid"] |

| data | ARRAY[JSON] | The data delivered from MySQL to Kafka, stored as an array of JSON objects. | `"old": [{ "name": "someone", "` |

| old | ARRAY[JSON] | Records the incremental replication details from MySQL to Kafka. For other operations, the value of this field is null. | "old": [{ "name": "someone", "phone": "(737)1234787", "email": "someone@example.com", "address": "somewhere", "country": "china" }] |

| sql | STRING | If the current data is an incremental DDL operation, records the SQL statement corresponding to the operation. For other operations, the value of this field is null. | "sql":"create table sbtest1(id int primary key,name varchar(20))" |
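
On the consumer side, the fields in the table above can be read with any JSON parser. The following is a minimal sketch that uses the `id` field to skip messages that were already consumed. It assumes the raw message values have already been fetched from Kafka as strings; the function name `process_messages` and the in-memory set of seen IDs are illustrative choices, not part of NineData.

```python
import json


def process_messages(raw_messages):
    """Yield (type, database, table, rows) for each message not seen before."""
    # Track consumed ids in memory. This grows without bound, so a real
    # consumer would persist or prune it (an assumption of this sketch).
    seen_ids = set()
    for raw in raw_messages:
        msg = json.loads(raw)
        msg_id = msg["id"]
        if msg_id in seen_ids:
            continue  # duplicate delivery of a message already handled
        seen_ids.add(msg_id)
        # `data` may be null, so fall back to an empty list of rows.
        rows = msg.get("data") or []
        yield msg.get("type"), msg.get("database"), msg.get("table"), rows
```

A set is used rather than a "last seen ID" comparison because messages read from several partitions are not guaranteed to arrive in ID order.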

Appendix 2: Checklist of Pre-Check Items

| Check Item | Check Content |

|---|---|

| Source Data Source Connection Check | Check the status of the source data source gateway, instance accessibility, and accuracy of username and password |

| Target Data Source Connection Check | Check the status of the target data source gateway, instance accessibility, and accuracy of username and password |

| Source Database Permission Check | Check if the account permissions in the source database meet the requirements |

| Check if Source Database log_slave_updates is Supported | Check if log_slave_updates is set to ON when the source database is a slave |

| Source Data Source and Target Data Source Version Check | Check if the versions of the source database and target database are compatible |

| Check if Source Database is Enabled with Binlog | Check if the source database is enabled with Binlog |

| Check if Source Database Binlog Format is Supported | Check if the binlog format of the source database is 'ROW' |

| Check if Source Database binlog_row_image is Supported | Check if the binlog_row_image of the source database is 'FULL' |

| Target Database Permission Check | Check if the Kafka account has access permissions for the Topic |

| Target Database Data Existence Check | Check if data exists in the Topic |