Create a Kafka Data Source

NineData supports adding data sources of various types and environments to the console for unified management. Once a data source has been added, you can use the database DevOps, backup and recovery, data replication, and database comparison features on it. This article describes how to add a Kafka data source to NineData.

Prerequisites

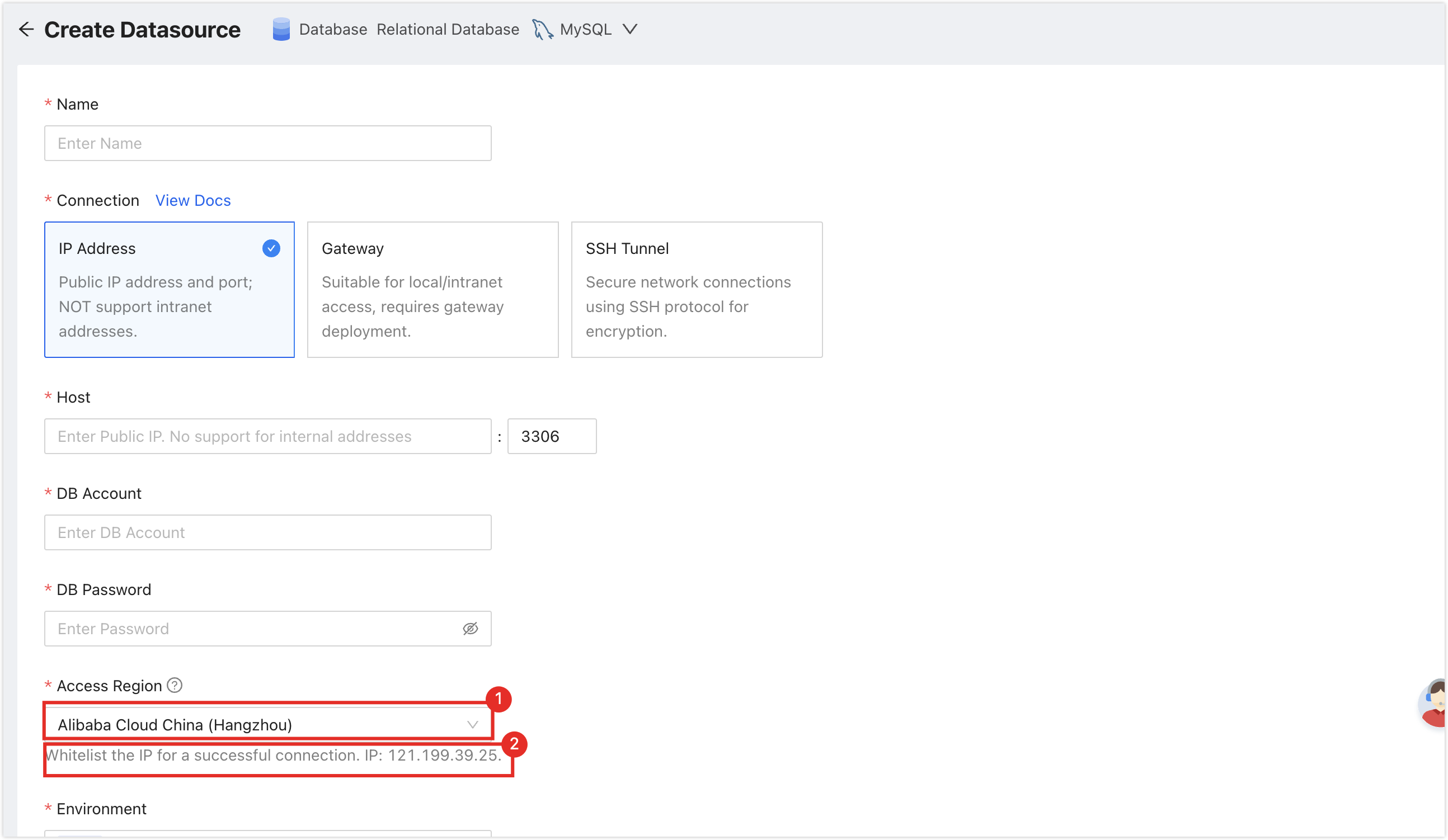

The server IP address of NineData has been added to the data source allowlist. Please refer to the image below for instructions on how to obtain the server IP address.

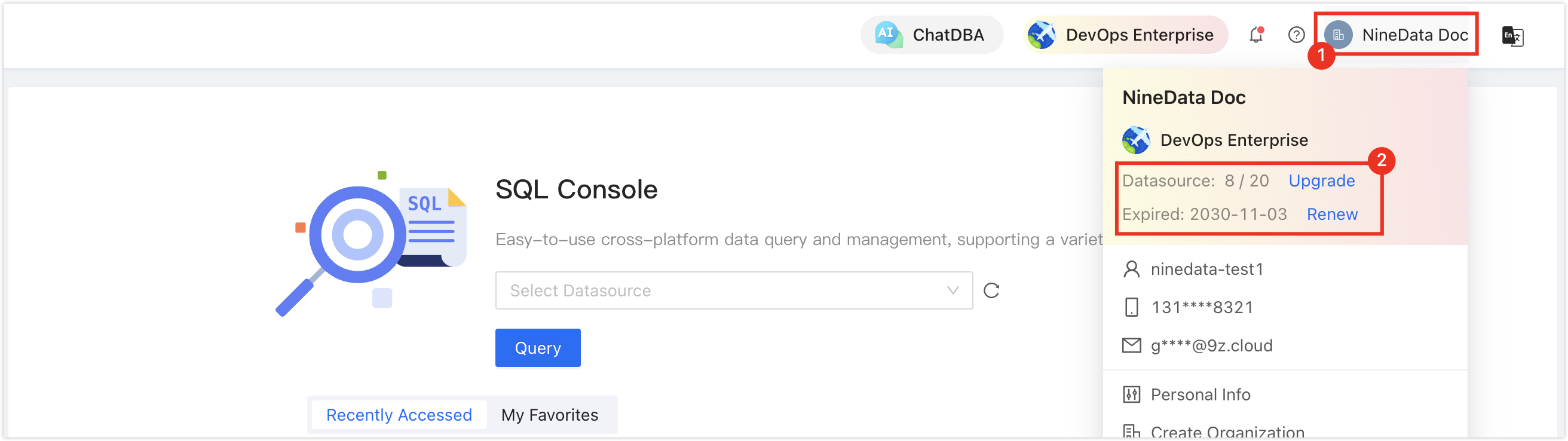

Make sure you have available data source quota; otherwise, the data source cannot be added. You can quickly check your remaining quota at the top-right corner of the NineData console.

Operation Steps

Log in to the NineData Console.

In the left navigation pane, click Datasource > Datasource.

Click the Datasource tab, then click Create Datasource on the page. In the popup window for selecting the data source type, choose Database > (the type of data source to be added), and configure the parameters on the Create Datasource page as described below.

Tip: If you make a mistake during the operation, you can click the icon at the top of the Create Datasource page to make a new selection.

Configure the data source parameters:

Name: Enter the name of the data source. Use a meaningful name to make later search and management easier.

Connection: Select the access method of the data source. Three methods are supported: IP Address, Gateway, and SSH Tunnel.

- IP Address: Access the data source through a public network address.

- Gateway: A secure and fast intranet access method provided by NineData. You must first connect the host where the data source is located. For the connection method, refer to Add Gateway.

- SSH Tunnel: Access the data source through an SSH tunnel.

Configuration items when Connection is IP Address:

- Broker List: A Kafka cluster is composed of multiple Brokers, each of which is an independent Kafka server instance. Enter the public network connection address and port of the Kafka data source. If there are multiple Brokers, click the Add button to enter the additional ones.

Configuration items when Connection is Gateway:

- Gateway: Select the NineData gateway installed on the host where the data source is located.

- Broker List: Enter the intranet connection address and port of the Kafka data source. The Broker address can be localhost (the data source is on the local machine) or the intranet IP of the host where the data source is located.
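Before typing Broker addresses into the Broker List, it can help to check that each one has the form host:port. The following is a minimal sketch of such a check; the function name `parse_broker_list` and the sample addresses are hypothetical and not part of NineData.

```python
from typing import List, Tuple


def parse_broker_list(entries: List[str]) -> List[Tuple[str, int]]:
    """Validate 'host:port' strings and return them as (host, port) pairs."""
    brokers = []
    for entry in entries:
        # Split on the last colon so the host part is kept intact.
        host, sep, port_text = entry.strip().rpartition(":")
        if not sep or not host:
            raise ValueError(f"expected host:port, got {entry!r}")
        if not port_text.isdigit() or not 1 <= int(port_text) <= 65535:
            raise ValueError(f"invalid port in {entry!r}")
        brokers.append((host, int(port_text)))
    return brokers


if __name__ == "__main__":
    # Hypothetical addresses: one local Broker and one intranet Broker.
    print(parse_broker_list(["localhost:9092", "10.0.0.5:9093"]))
```

An entry without a port, such as a bare host name, raises `ValueError` instead of being passed on silently.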

Configuration items when Connection is SSH Tunnel:

- SSH Host: Enter the public IP or domain name of the server where the target data source is located, and the corresponding port number (the default port number for the SSH service is 22).

- SSH Authentication Method: Select the SSH authentication method.

  - Password: Connect through SSH Username (the server's login name) and Password (the server's login password).

    - SSH Username: Enter the login username of the server where the target data source is located.

    - Password: Enter the login password of the server where the target data source is located.

  - Key (recommended): Connect through SSH Username and Key File.

    - SSH Username: Enter the login username of the server where the target data source is located.

    - Key File: Click Upload to upload the private key file, which is a key file without a suffix. If you have not created one yet, refer to Generate SSH Tunnel Key File.

    - Password: Enter the password set when generating the key file. If you did not set a password during key generation, leave this field blank.

  Note: After completing the SSH configuration, click Connection Test on the right. There are two possible results:

  - Connection Successfully is displayed: The SSH tunnel has been opened.

  - An error message is displayed: The connection failed. Troubleshoot the cause according to the error message and retry.

- Broker List: Enter the intranet connection address and port of the Kafka data source. The Broker address can be localhost (the data source is on the local machine) or the intranet IP of the host where the data source is located.
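NineData opens the SSH tunnel itself. If the SSH Connection Test fails, one way to check the SSH host, username, and key independently is to open an equivalent local port forward with the OpenSSH client. The sketch below only builds that command line; the function name, host names, user, and key path are hypothetical, and it assumes the standard `ssh` options `-N`, `-p`, `-L`, and `-i`.

```python
import shlex
from typing import List, Optional


def build_ssh_tunnel_command(
    ssh_host: str,
    ssh_user: str,
    broker_host: str,
    broker_port: int,
    local_port: int,
    ssh_port: int = 22,
    key_file: Optional[str] = None,
) -> List[str]:
    """Build an OpenSSH command that forwards local_port to the Broker."""
    cmd = [
        "ssh",
        "-N",                 # do not run a remote command, only forward
        "-p", str(ssh_port),  # SSH port on the server (22 by default)
        "-L", f"{local_port}:{broker_host}:{broker_port}",
    ]
    if key_file:
        cmd += ["-i", key_file]  # private key used for key authentication
    cmd.append(f"{ssh_user}@{ssh_host}")
    return cmd


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    command = build_ssh_tunnel_command(
        "ssh.example.com", "deploy", "localhost", 9092, 19092,
        key_file="/path/to/private_key",
    )
    print(shlex.join(command))
```

If this command connects without an error, the SSH Host, SSH Username, and Key File values are likely correct, and the remaining problem is more likely in the Broker List.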

Authentication Method: Select the Kafka authentication method.

- PLAINTEXT: No authentication information is required and data is transmitted in plaintext. This method is usually suitable for development and testing environments.

- SASL/PLAIN (default): A SASL-based authentication method that requires a username and password. Data is transmitted in plaintext, so it is recommended to use this method on encrypted transmission networks.

- SASL/SCRAM-SHA-256: A SASL-based authentication method that requires a username and password. It uses the SHA-256 algorithm to hash the password, providing higher security.

- SASL/SCRAM-SHA-512: A SASL-based authentication method that requires a username and password. It uses the SHA-512 algorithm to hash the password, providing higher security.

Username: Kafka username.

Password: Kafka password.

Access Region: Select the region closest to your data source to effectively reduce network latency.

Environment: Choose according to the actual business purpose of the data source. The environment serves as an identifier for the data source. PROD and DEV environments are provided by default, and you can also create a custom environment.

Note: In organization mode, the database environment can also be applied to permission policy management. For example, the default Prod Admin role can only access data sources in the PROD environment and cannot access data sources in other environments. For more information, refer to Manage Roles.

SSL: Whether to use SSL encryption to access the data source (off by default). If the data source enforces SSL encrypted connections, this switch must be turned on; otherwise, the connection fails. Click the > to the left of Encryption to expand the detailed configuration:

- Trust Store: The trusted certificate issued by the CA, i.e., the server-side certificate. This certificate is used to verify the identity of the server to ensure that the Kafka service you are connecting to is trusted.

- Trust Store Password: The password used to protect the Trust Store certificate.

- Key Store: The certificate used to verify user identity, i.e., the client-side certificate. This certificate ensures that the user connecting to Kafka is a trusted user.

- Key Store Password: The password used to protect the Key Store certificate.
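The authentication and SSL options above correspond to standard Apache Kafka client settings (`security.protocol`, `sasl.mechanism`, `sasl.jaas.config`, `ssl.truststore.location`, `ssl.truststore.password`). The sketch below shows one plausible mapping, which can be useful when reproducing the same connection with a Kafka client outside NineData. The function name and parameters are hypothetical, and how NineData applies these settings internally is an assumption.

```python
from typing import Dict, Optional

# Authentication Method label -> Kafka SASL mechanism (None means no SASL).
_MECHANISMS = {
    "PLAINTEXT": None,
    "SASL/PLAIN": "PLAIN",
    "SASL/SCRAM-SHA-256": "SCRAM-SHA-256",
    "SASL/SCRAM-SHA-512": "SCRAM-SHA-512",
}


def kafka_client_properties(
    auth_method: str,
    username: Optional[str] = None,
    password: Optional[str] = None,
    ssl_enabled: bool = False,
    truststore_path: Optional[str] = None,
    truststore_password: Optional[str] = None,
) -> Dict[str, str]:
    """Translate the form choices into Kafka client properties."""
    if auth_method not in _MECHANISMS:
        raise ValueError(f"unknown authentication method: {auth_method!r}")
    mechanism = _MECHANISMS[auth_method]
    props: Dict[str, str] = {}

    if mechanism is None:
        props["security.protocol"] = "SSL" if ssl_enabled else "PLAINTEXT"
    else:
        if username is None or password is None:
            raise ValueError("SASL methods require a username and password")
        props["security.protocol"] = "SASL_SSL" if ssl_enabled else "SASL_PLAINTEXT"
        props["sasl.mechanism"] = mechanism
        module = (
            "org.apache.kafka.common.security.plain.PlainLoginModule"
            if mechanism == "PLAIN"
            else "org.apache.kafka.common.security.scram.ScramLoginModule"
        )
        # No escaping is done here; quotes in credentials would need handling.
        props["sasl.jaas.config"] = (
            f'{module} required username="{username}" password="{password}";'
        )

    if ssl_enabled and truststore_path:
        props["ssl.truststore.location"] = truststore_path
        if truststore_password:
            props["ssl.truststore.password"] = truststore_password
    return props


if __name__ == "__main__":
    # Hypothetical credentials for illustration only.
    for key, value in kafka_client_properties(
        "SASL/SCRAM-SHA-256", "app_user", "secret", ssl_enabled=True
    ).items():
        print(f"{key}={value}")
```

The Key Store settings are left out to keep the sketch short; in a Kafka client they would map to `ssl.keystore.location` and `ssl.keystore.password` in the same way.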

After all configurations are completed, click Connection Test next to Create Datasource to check whether the data source can be accessed. If Connection Successfully is displayed, click Create Datasource to finish adding the data source. Otherwise, recheck the connection settings until the connection test succeeds.
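When the connection test keeps failing, a plain TCP check from a machine with comparable network access can help separate network problems (firewall, allowlist, wrong port) from authentication problems. The following is a minimal sketch using only the Python standard library; the function name and the sample address are hypothetical. A successful TCP connection shows only that the port is open, not that Kafka authentication will succeed.

```python
import socket


def broker_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


if __name__ == "__main__":
    # Hypothetical Broker address for illustration only.
    print(broker_reachable("localhost", 9092))
```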

Appendix: Add NineData's IP address to the Kafka database whitelist

When you add a data source hosted on-premises or in another cloud, you must add the IP address of the NineData service to the database whitelist so that NineData can provide its services.

This section takes Kafka version 3.3.2 as an example to introduce how to add an IP whitelist.

Open Kafka's configuration file server.properties, which is usually located at <Kafka installation directory>/config/server.properties.

Find the listeners parameter and set its value to the IP address and port number that allow access to Kafka. For example, if you want to allow NineData to access Kafka, you can set it to:

listeners=PLAINTEXT://121.199.39.25:9092

Save the changes and restart the Kafka service.
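To confirm what server.properties contains before and after the change, the `listeners` value can be read back and split into its parts. In Apache Kafka this value is a comma-separated list of entries in the form name://host:port. The sketch below is read-only and works on the file's text; the function name is hypothetical.

```python
from typing import List, Tuple


def read_listeners(properties_text: str) -> List[Tuple[str, str, int]]:
    """Return (listener name, host, port) for each entry in 'listeners'."""
    found: List[Tuple[str, str, int]] = []
    for raw in properties_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments ('#' or '!' in .properties files).
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if not sep or key.strip() != "listeners":
            continue
        # A later definition replaces an earlier one, as in java.util.Properties.
        found = []
        for item in value.split(","):
            name, _, address = item.strip().partition("://")
            host, _, port = address.rpartition(":")
            found.append((name, host, int(port)))
    return found


if __name__ == "__main__":
    sample = "broker.id=0\nlisteners=PLAINTEXT://121.199.39.25:9092\n"
    print(read_listeners(sample))
```

To use it on a real installation, pass the contents of the file, for example `read_listeners(open(path).read())`, where `path` points to your own server.properties.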